Protecting minors from harmful calls

Project overview

Both minors and adults use the calling and chatting features of Messenger and Instagram. Minors, however, are often at disproportionate risk of harassment on these platforms, especially from unconnected bad actors: people who aren’t friends with these minors, but who try to establish contact so that they can call them.

Minors may not always pick up when a potential harasser calls them, but even an unanswered, unwanted call can harm their mental health. If a minor does pick up, the potential damage could be life-altering.

With minors’ lives literally on the line, Meta’s Remote Presence Safety & Inclusion team sought to warn minors away from unconnected callers, and empower them to protect themselves if the worst occurs.

Solution

Working with another product designer, and in consultation with the Messenger Trust team, which handles user protection in messaging, I co-designed and wrote several iterations of a minor protection experience for Messenger and Instagram calls.

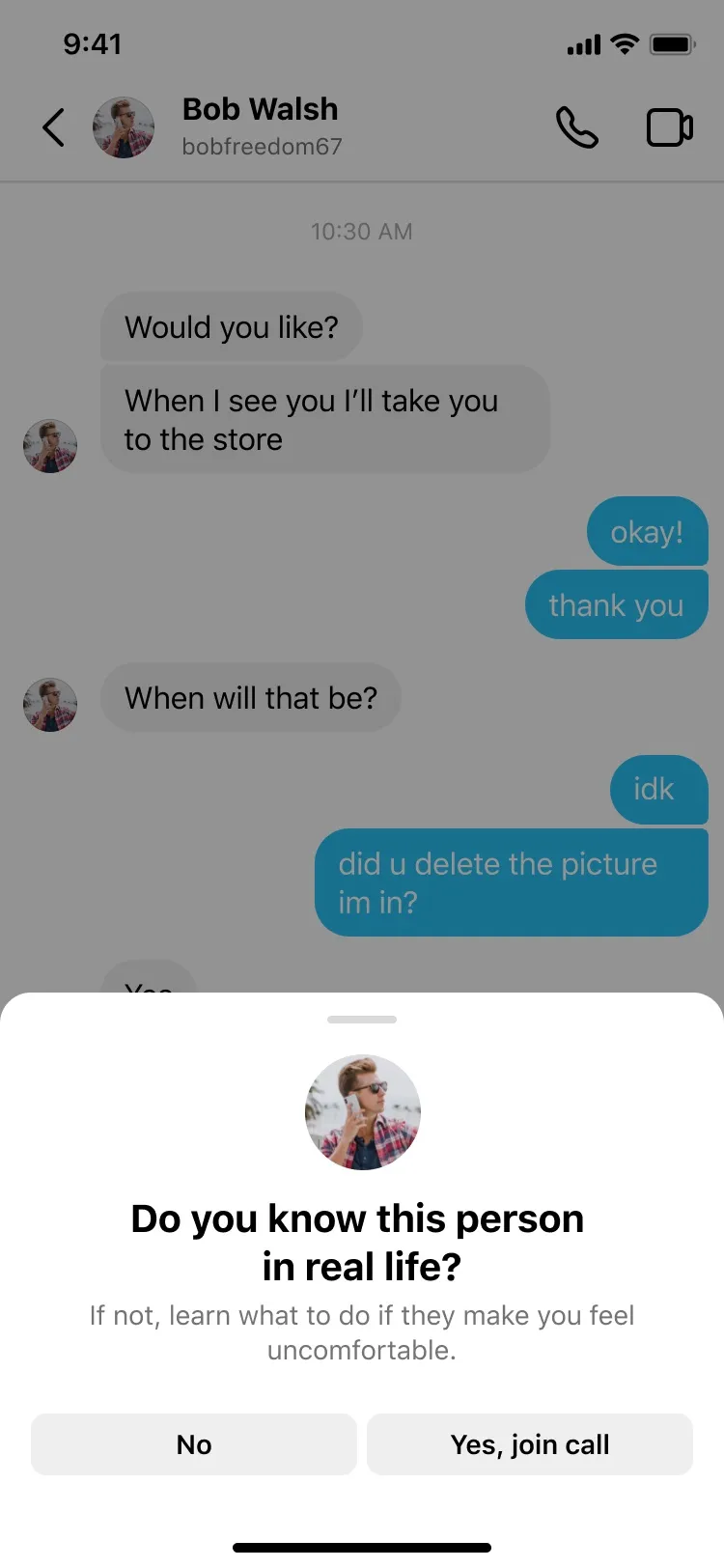

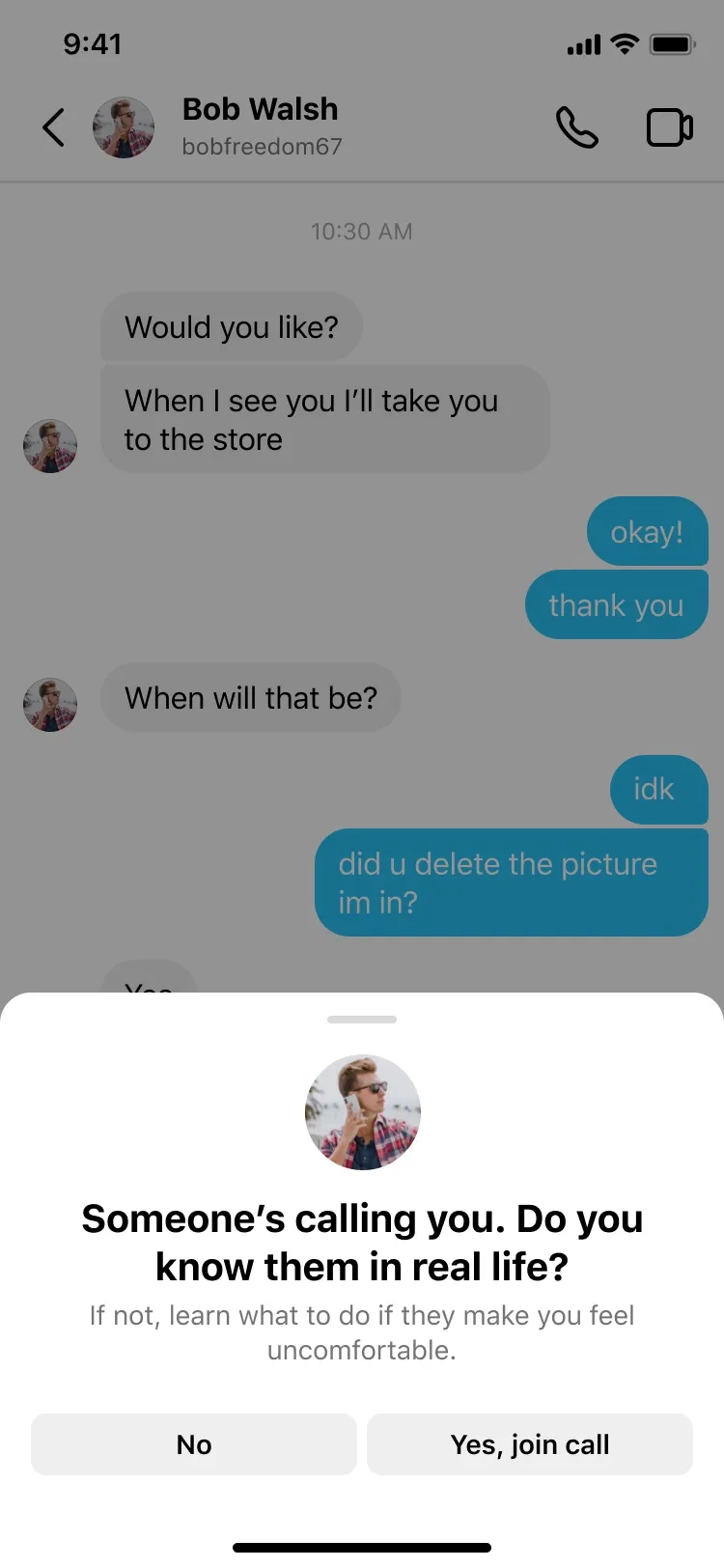

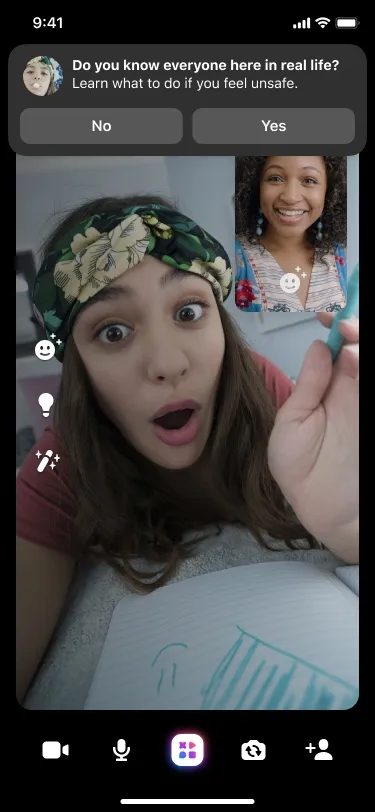

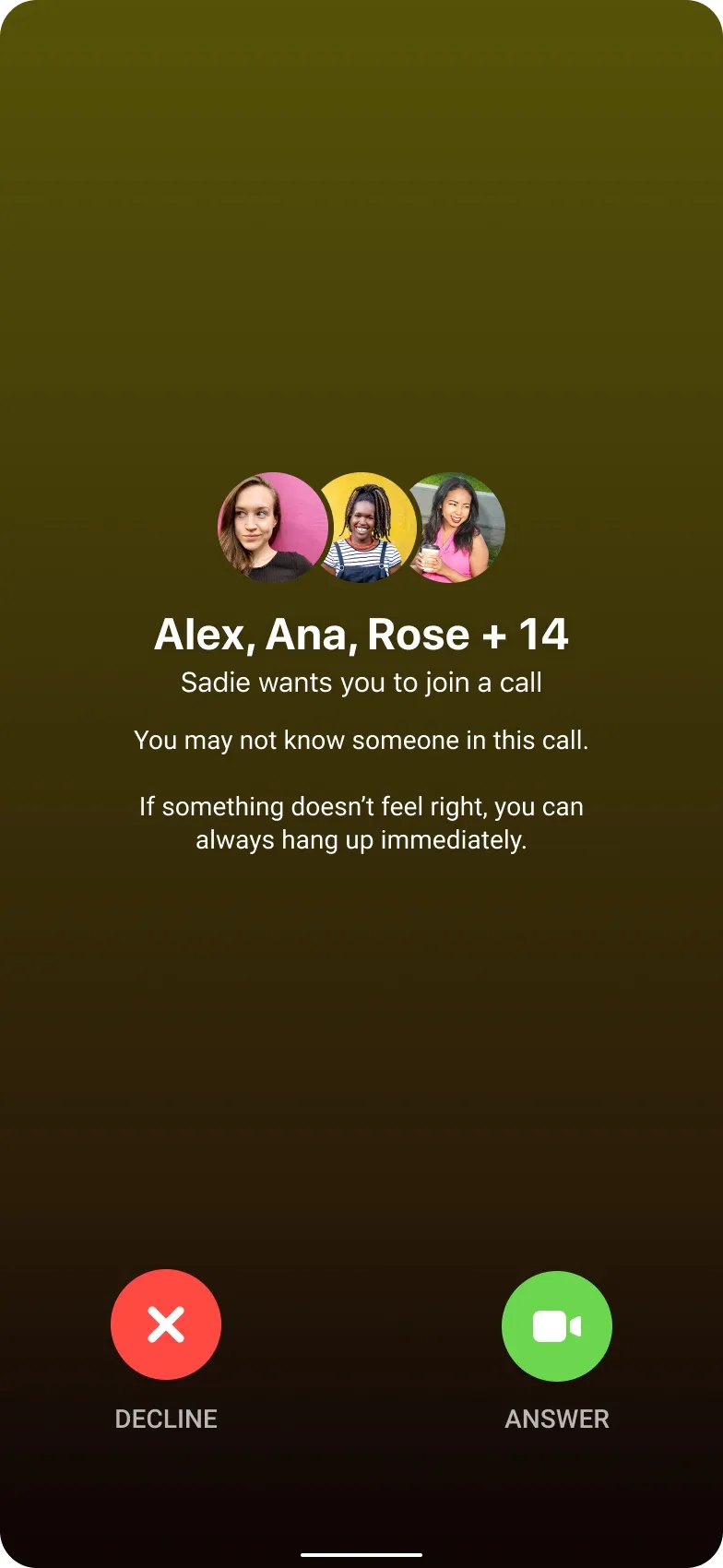

In our design, a minor sees an interstitial before making a call to, or accepting a call from, someone Meta detects may be harmful: for instance, someone who isn’t connected to the minor, or who has tried to add many minors as friends on Facebook.

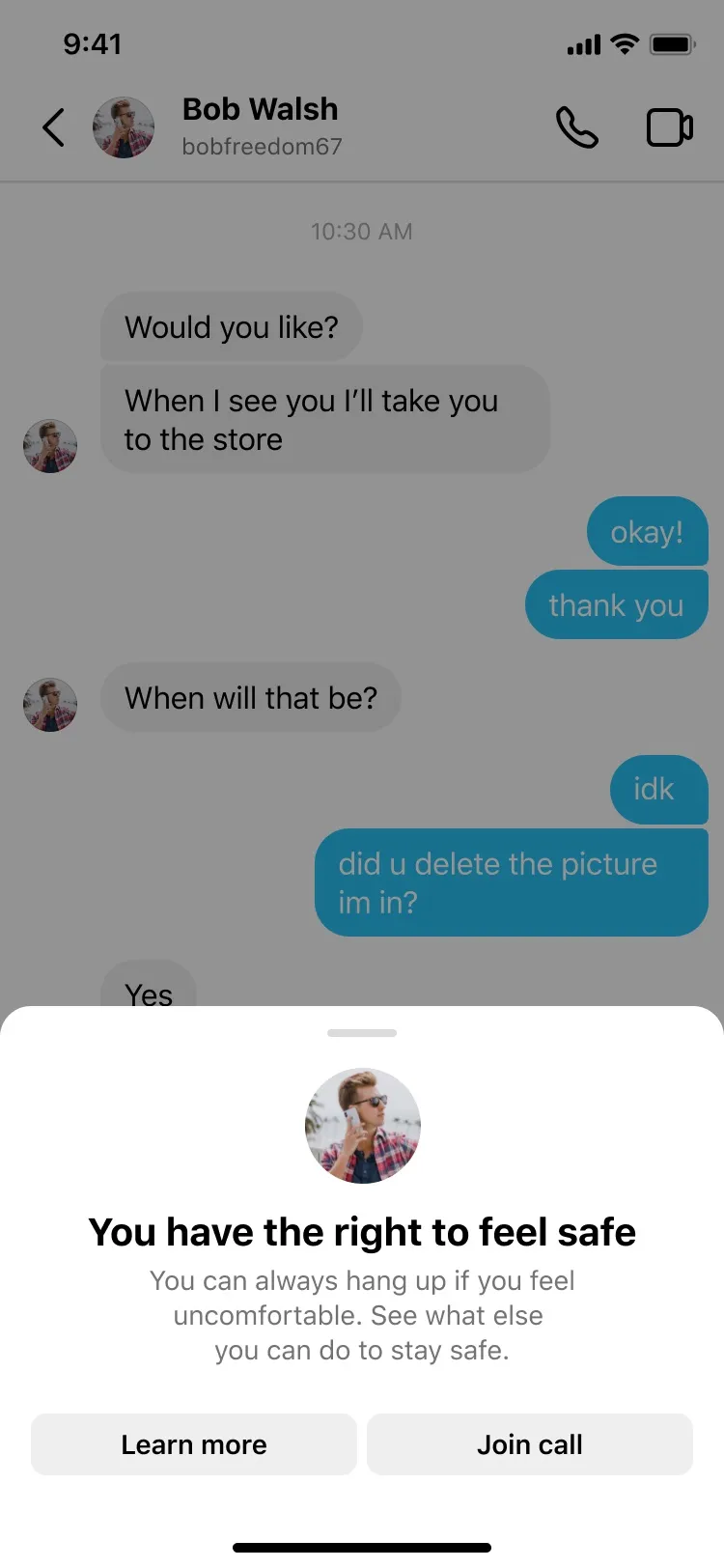

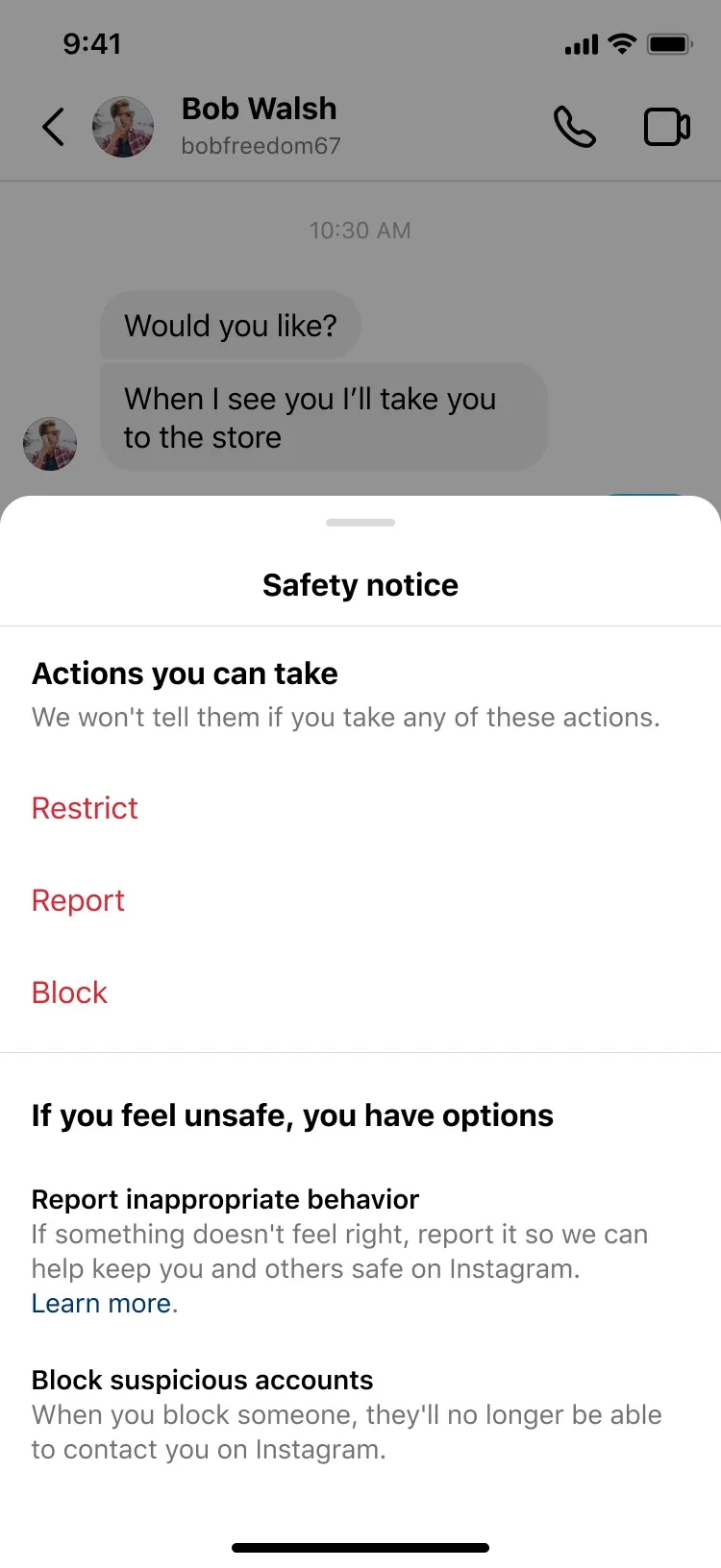

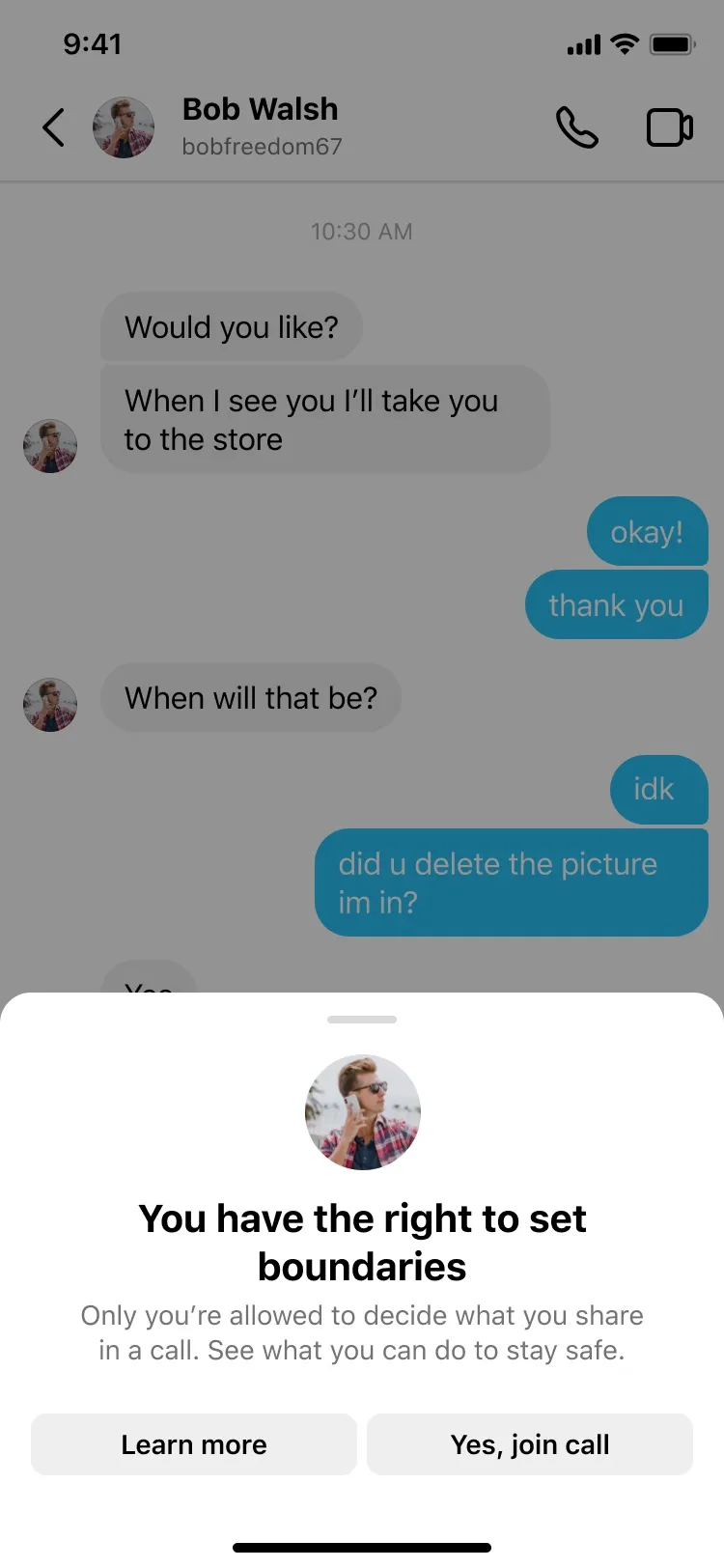

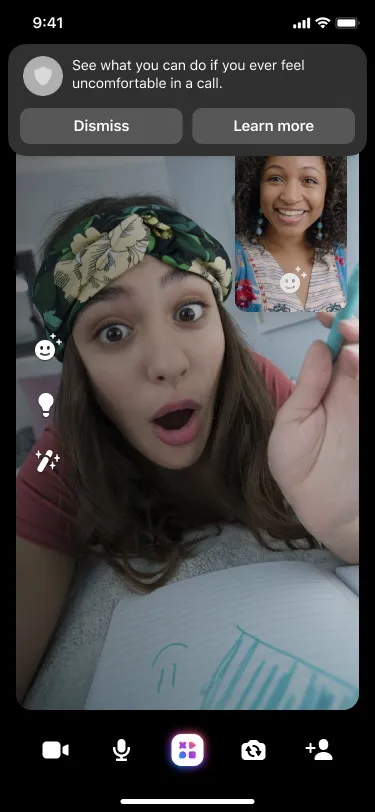

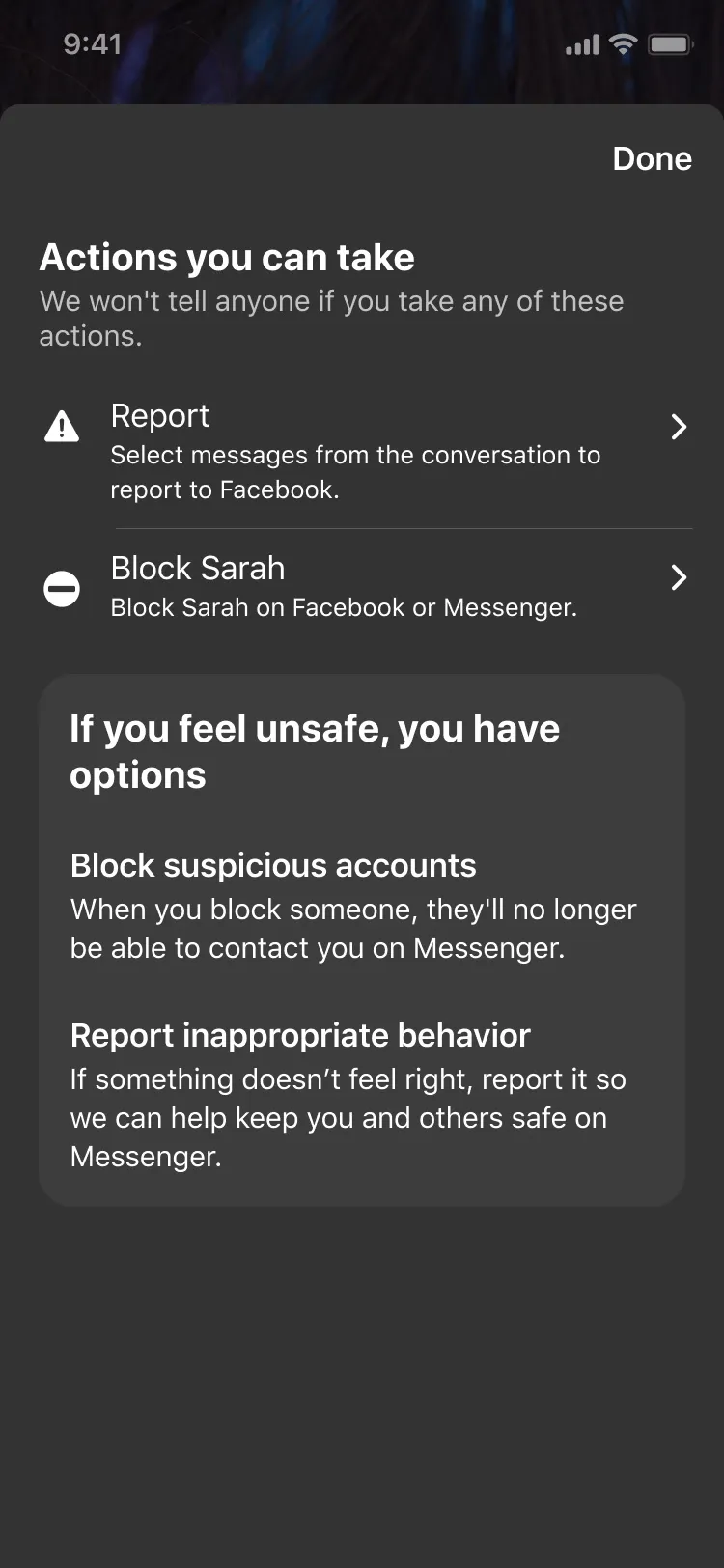

This interstitial asks the minor if they know the person in real life: a simple phrase young minors can understand quickly. Then, it offers additional help if they don’t know the person. In particular, a screen that appears after the minor taps “no” upsells the useful block, mute and restrict functions.

Additional language appears below those upsells, with detailed assistance for minors who may need it.

All content and designs were co-developed with child safety experts. Although the content is relatively long, it’s structured to surface the most relevant actions first. Even if a minor doesn’t read anything below the upsells for blocking, muting and restricting, they can still use these actions to prevent harm.

Research

User testing helped confirm that this solution was the right tack to take. Rolling research with minors helped me iterate on the language to make it more readable, especially for younger participants. Meanwhile, surveys indicated that minors would be more inclined to decline calls from unconnected individuals, because they surmised that Meta knew there could be a problem.

In a win for content design, minors also took the time to read the longer content without being asked to. They cited the empowering nature of the content, and mentioned that they felt good knowing they had the right to back away from a difficult conversation. Since child safety advocates had often asked me to incorporate rights-based language, this validated my approach and helped me develop content for other child safety products.

Future thinking

This project initially rolled out only to 1:1 calls, but protections in group calls quickly followed. To keep mitigating harm, we continued to monitor user feedback and analyze metrics. The pie in the sky? To produce a more holistic minor education flow in Messenger, one that doesn’t wait for minors to potentially be in a call with a harmful person before educating them on safety.